Vision-Based Teleoperation for a Robotic Hand-Arm System

Introduction#

Teleoperation is an essential tool for performing complex

manipulation tasks that require dexterity and precision

with robots. It has a broad range of applications such as

operating robots in remote or dangerous environments and

collecting demonstrations for learning purposes. In particular

when it comes to highly dexterous robotic systems, such as

humanoid hands, employing conventional methods for collecting

expert demonstrations, e.g. using

kinesthetic teaching

can be extremely hard.

Usually, teleoperation approaches include an operator who

controls the robot’s movements and actions using a set of

input devices, such as

a haptic device,

a motion-tracking glove,

or a custom hardware solution.

Recently, due to the advancements in the computer vision field,

vision-based teleoperation has gained traction

(Sivakumar et al. 2022,

Arunachalam et al. 2023).

In this case, a visual input device, such

as a depth or RGB camera, is used to analyze the movements of

the operator and transfer them to the robot. Using a visual

device can be more cost-effective and allows the operator

to have more freedom in their movements.

Here, we focus on a vision-based teleoperation solution

using a single RGB camera. The target system is a robotic

platform that consists of a humanoid robotic hand mounted on a

robotic arm. More specifically, we use the

Kinova Kortex Gen3 arm and for the hands the

Seed Robotics RH8D

hand which has 7 DoFs, and the Pisa/IIT

SoftHand

(QB Hand) which has 1 DoF.

Using monocular RGB cameras significantly broadens the use

cases of our system, since they are the simplest and most

low-cost visual device that anybody can have access to. For

example, using our system an operator could teleoperate a

robot remotely using their laptop’s camera from their home.

This could help crowd-source expert trajectories for training

learning algorithms.

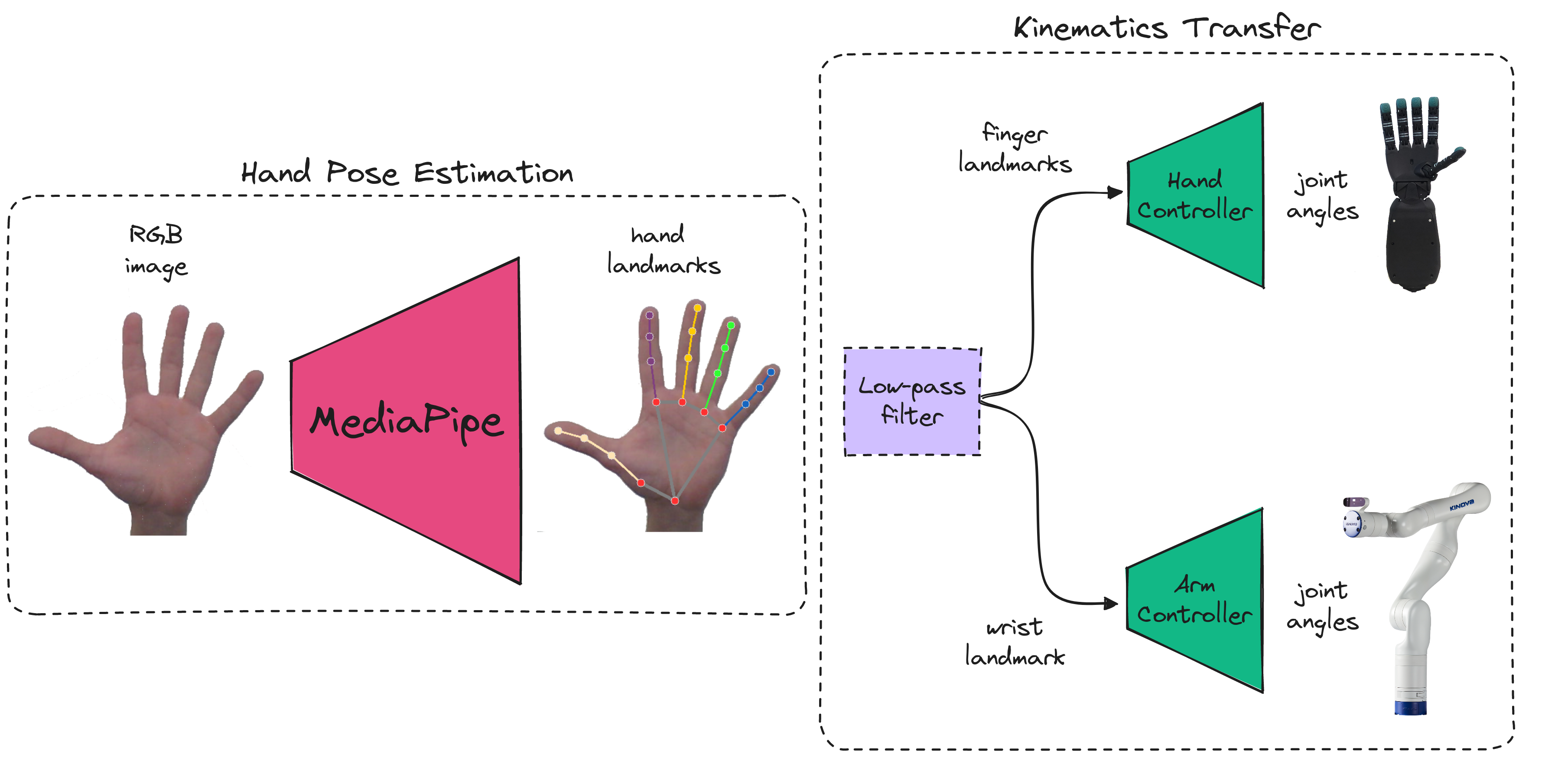

To achieve this, we use a hand pose estimation method that

estimates the 3D joint positions of the operator’s hand using

RGB images. We then compute the operator’s hand kinematics and

transfer them to the robotic hand. We use the joint angles of

the operator’s hand to control the robotic fingers and the wrist

position to control the robot’s end-effector. We demonstrate

the effectiveness of our system by presenting a series of

pick-and-place tasks, both in a simulated and a real-world

scenario.

Hand Pose Estimation#

Lately, several methods have been developed for 3D hand pose estimation using monocular RGB inputs. Most of them rely on neural network architectures that are trained on large datasets. Two popular solutions are the FrankMocap framework (Rong et al. 2021) and the MediaPipe Hands solution (Zhang et al. 2020) . FrankMocap can output also the 3D model of the hand using the hand deformation model MANO. On the other hand, MediaPipe estimates only the relative 3D joint positions of the hand. Both methods produce accurate estimations in most cases. However, in some preliminary experiments, FrankMocap seemed to confuse fingers in some challenging poses. In addition, FrankMocap runs at around 10 fps, while MediaPipe runs at around 30 fps on a desktop computer with an average GPU. Since we need the hand pose estimation method to run in real-time to reliably control the robot we opted to use the MediaPipe solution.

Kinematics Transfer#

After obtaining the hand landmarks the next step is to compute the operator’s hand kinematics and transfer them to the robotic system. The main component of the hand's kinematics is the finger’s positions, which can be represented by the joint’s angles or the fingertip’s positions. But, in order to perform more practical manipulation tasks, e.g. pick-and-place objects, opening containers, etc., that might be useful in more general environments, we need the hand to be able to move around in the space to interact with the objects in the environment. Consequently, we also need to transfer the 3D position of the hand in the space. Using this position, we can also teleoperate the robotic arm that the hand is mounted on.

Hand Controller#

There are several approaches for transferring the operator's

hand kinematics to the robotic hand, such as using inverse

kinematics (IK) to optimize keypoints of the robotic hand

to match the operator’s hand keypoints

(Handa et al. 2020),

or using some end-to-end solution to directly predict the robot joint angles

(Li et al. 2019).

However, using the inverse kinematic solution requires

accurate models of the robotic hands, which in our case,

do not exist. On the other hand, end-to-end solutions

require collecting training data which is time-consuming.

To this end, we opted for a simple angle-based mapping method,

which is fast and does not require any training.

We assume that the operator has visual

feedback of the teleoperated system, so they can adapt their

movements in cases of inaccuracies caused by the mapping

method. For the Seed Robotics hand that has 7 DoFs, 2 for the

wrist and 5 for the fingers, we chose five angles extracted

from the estimated pose to transfer to the robot. You can see

the joints that are used for the mapping in the Figure below.

For the Pisa\IIT Soft hand, which has only 1 DoF, we use the

average of all the joint angles of the operator's hand to

command it.

Arm Controller#

Controlling the arm of the robot can be done using only the 3D position of the wrist of the operator. The hand pose extraction methods presented above, estimate 2.5D coordinates for the hand landmarks. This means that we can only control the end-effector of the robotic arm in two directions, since we do not have enough information about the third. So, we chose to map the movements of the operator’s wrist in the horizontal and vertical direction in the 2D image to the $z$ and $x$ axis of the robot’s end-effector. In order to be able to control the robot in all three directions to make the system more useful in real-world scenarios, we track the second hand of the operator and map its movement on the horizontal axis to the robot’s $y$ direction. In practice, the robot and the operator’s hand start from a fixed position near the middle of their operational space, we then calculate small displacements of the wrist and transfer them to the robotic arm using an inverse kinematics controller.

Results#

We applied this method both in simulation and in a real-world setting. For the simulated tasks we developed one environment for each hand. For the Seed Robotics hand, we used three colored cubes on a table and the goal was to stack them on top of each other. You can see the execution of the task in the video below.

For the Pisa/IIT SoftHand, we used three household objects: an orange, a coke can, and a meat can and the goal was to move everything inside the tray on the side of the table. You can see the execution of the task in the video below. In this case, we also make use of the second hand to control the arm in the $y$ direction.

Finally, for the real-world experiments we used the Seed Robotics hand and the Kinova arm to perform a table cleaning task. We placed three small boxes on a table and a big cardboard box as their target position. The operator then teleoperated the robotic system using the vision pipeline to place all objects in the cardboard box.

Conclusion#

In summary, we developed a system for teleoperating a dexterous robot through monocular RGB input. Although we can control the robot to perform simple manipulation tasks, our system lacks the ability to control the orientation of the hand, which could be an interesting future engineering challenge. This project demonstrated that building such teleoperation systems can be fairly easy and cost-effective. Ongoing research in vision based teleoperation (Qin et al. 2023) can have provide multiple benefits for the field of robotic manipulation.

References#

[1] Arunachalam et al. “Dexterous Imitation Made Easy: A Learning-Based Framework for Efficient Dexterous Manipulation.” ICRA, 2023.[2] Sivakumar et al. “Robotic Telekinesis: Learning a Robotic Hand Imitator by Watching Humans on YouTube.” Arxiv, 2022.

[3] Rong et al. “FrankMocap: A Monocular 3D Whole-Body Pose Estimation System via Regression and Integration.” ICCVW, 2021.

[4] Zhang et al. “MediaPipe Hands: On-device Real-time Hand Tracking” CV4ARVR, 2020.

[5] Handa et al. “DexPilot: Vision-Based Teleoperation of Dexterous Robotic Hand-Arm System.” ICRA, 2020.

[6] Li et al. “Vision-based Teleoperation of Shadow Dexterous Hand using End-to-End Deep Neural Network.” ICRA, 2019.

[7] Qin et al. “AnyTeleop: A General Vision-Based Dexterous Robot Arm-Hand Teleoperation System” RSS, 2023.